Hadoop Components – Architecture, Core Components, Ecosystem, & More

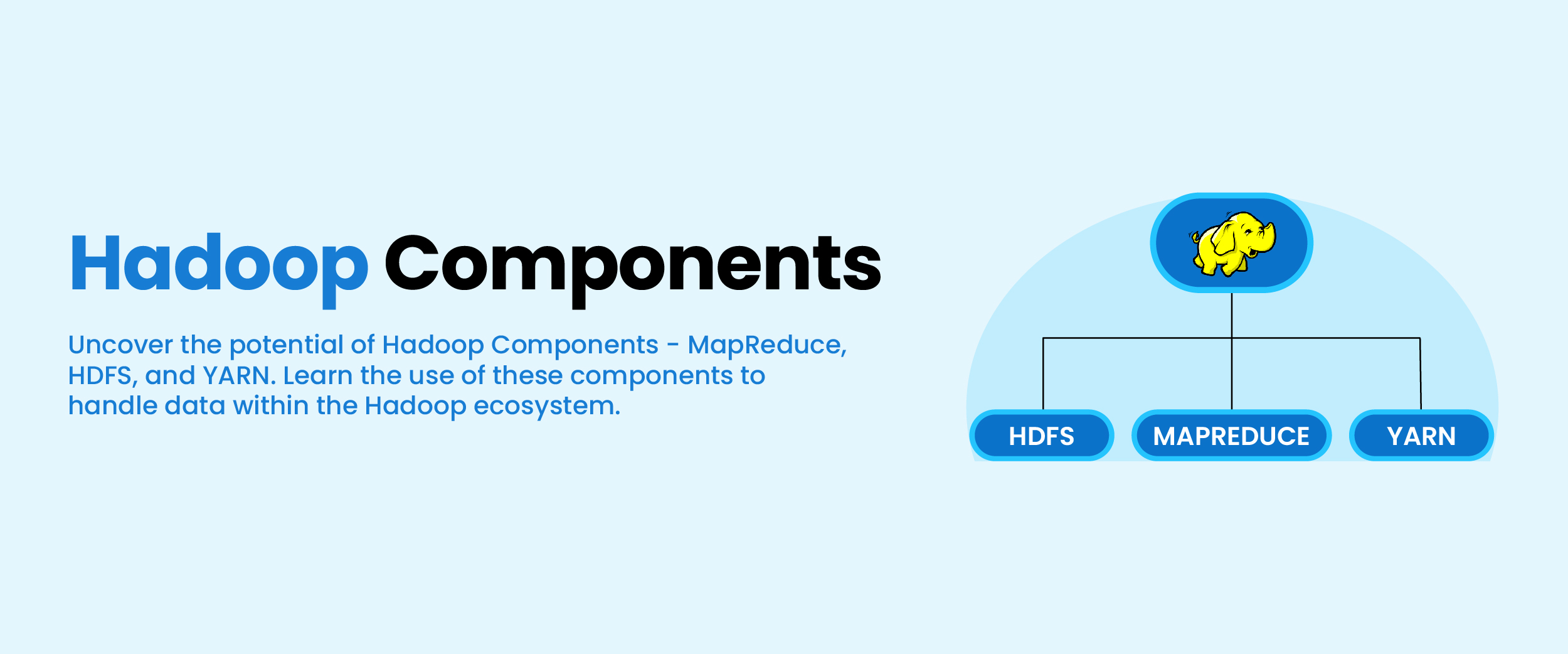

In today’s digital era, big data is revolutionizing industries. Hadoop, a framework for distributed storage and parallel data processing, makes the task of handling big data far more manageable. It processes vast amounts of data through its core components: HDFS, MapReduce, and YARN. Around these components, Hadoop’s ecosystem offers a constellation of tools for tasks such as ingesting live streaming data, querying and analyzing data, and transferring data between systems. This comprehensive guide to Hadoop components will build your overall knowledge of Hadoop and big data.

What is Hadoop?

Hadoop is an open-source framework for storing and processing large amounts of data across a cluster of computers, using parallel processing together with efficient distributed storage and retrieval. It manages massive volumes of data by distributing both the data and the processing tasks over multiple cluster nodes. Because its architecture is distributed and it scales by adding nodes, Hadoop enables enterprises to handle big data challenges effectively.

Hadoop and Big Data

Before digitalization, computer systems processed small amounts of data, such as Word documents and Excel spreadsheets, using a single processor and its memory for storage and processing. With the advent of the Internet, the need for data processing and storage increased massively. Data is no longer limited to documents or rows and columns: it now includes structured data (mainly databases), semi-structured data, and unstructured data such as audio, video, images, and email. Together, this data forms ‘big data’.

The Hadoop framework was developed to handle distributed storage and big data processing. Its architecture consists of a collection of software utilities and components that together make big data manageable.

Architecture and Core Components of Hadoop

Hadoop’s architecture is built on three core components, HDFS, MapReduce, and YARN, each designed specifically to work with big data. This section discusses each of them in more detail.

I. HDFS Component

HDFS stands for “Hadoop Distributed File System” and is the storage unit of Hadoop. Big data is split into blocks, and the blocks are distributed across many machines. HDFS replicates each block on several DataNodes (three copies by default), so if one node fails, the data can still be read from another copy. This replication is what makes HDFS fault tolerant.
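As a rough illustration of how replication provides fault tolerance, the Python sketch below assigns each block to three distinct DataNodes and then shows which replicas remain available after one node fails. It assumes a simplified round-robin placement and uses hypothetical names (`place_replicas`, `replicas_after_failure`, and the node and block IDs); real HDFS uses a rack-aware placement policy and a configurable replication factor (`dfs.replication`, 3 by default).

```python
REPLICATION_FACTOR = 3  # mirrors the HDFS default for dfs.replication


def place_replicas(block_ids, datanodes, replication=REPLICATION_FACTOR):
    """Assign each block to `replication` distinct DataNodes (simplified round-robin)."""
    if replication > len(datanodes):
        raise ValueError("replication factor exceeds the number of DataNodes")
    placement = {}
    for i, block in enumerate(block_ids):
        # Start at a different node for each block and take consecutive nodes,
        # wrapping around, so the copies of one block never share a node.
        placement[block] = [datanodes[(i + k) % len(datanodes)] for k in range(replication)]
    return placement


def replicas_after_failure(placement, failed_node):
    """Return the replicas of each block that are still available after a node fails."""
    return {block: [n for n in nodes if n != failed_node] for block, nodes in placement.items()}


nodes = ["dn1", "dn2", "dn3", "dn4"]
placement = place_replicas(["blk_1", "blk_2"], nodes)
print(placement)                                 # {'blk_1': ['dn1', 'dn2', 'dn3'], 'blk_2': ['dn2', 'dn3', 'dn4']}
print(replicas_after_failure(placement, "dn2"))  # {'blk_1': ['dn1', 'dn3'], 'blk_2': ['dn3', 'dn4']}
```

Every block still has two readable copies after `dn2` is lost, which is the property that lets HDFS keep serving data while it re-replicates the missing copies.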

It comprises the following:

1. Storage Nodes in HDFS

An HDFS cluster runs on a set of physical workstations or servers (nodes) that play different roles. The DataNodes hold and manage the actual data blocks that make up the files stored in the cluster, while the NameNode keeps track of where those blocks are.

- NameNode (Master): The NameNode is the master of the HDFS cluster and directs the DataNodes (slaves). It stores the file system metadata, including the transaction (edit) log, file names, sizes, and block location information. Clients use it to find the nearest DataNode holding the data they need, and it instructs DataNodes on operations such as creating, deleting, and replicating blocks.

- DataNode (Slave): DataNodes are the slave nodes that store the data in a Hadoop cluster. A cluster can have anywhere from one to 500 or more DataNodes, and adding DataNodes increases the amount of data the cluster can store.

- Secondary NameNode: Despite its name, it is neither a backup nor a standby for the NameNode. It periodically performs checkpoints: it merges the NameNode’s edit log into the file system image (fsimage) so that the edit log does not grow without limit and the NameNode can restart faster.
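To make the checkpoint idea concrete, here is a minimal Python sketch that models the namespace as a dictionary (the fsimage) plus a list of logged operations (the edit log) and merges the two. The class and function names are hypothetical; in a real cluster the fsimage and edit log are on-disk files that the Secondary NameNode fetches from the NameNode and, after merging, sends back as a new fsimage.

```python
from dataclasses import dataclass, field


@dataclass
class NameNodeState:
    # fsimage: snapshot of the namespace at the last checkpoint (path -> block IDs)
    fsimage: dict = field(default_factory=dict)
    # edit log: every change recorded since that checkpoint
    edits: list = field(default_factory=list)

    def create(self, path, blocks):
        self.edits.append(("create", path, list(blocks)))

    def delete(self, path):
        self.edits.append(("delete", path, None))


def checkpoint(state):
    """Replay the edit log on top of the fsimage and return a state with an empty log."""
    image = {path: list(blocks) for path, blocks in state.fsimage.items()}
    for op, path, blocks in state.edits:
        if op == "create":
            image[path] = blocks
        elif op == "delete":
            image.pop(path, None)
    return NameNodeState(fsimage=image, edits=[])


state = NameNodeState()
state.create("/logs/a.txt", ["blk_1", "blk_2"])
state.create("/logs/b.txt", ["blk_3"])
state.delete("/logs/a.txt")
merged = checkpoint(state)
print(merged.fsimage)  # {'/logs/b.txt': ['blk_3']}
print(merged.edits)    # []
```

After the merge, the three logged operations are folded into a single compact snapshot, which is why a NameNode restarting from a recent checkpoint has far less log to replay.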

2. File Block in HDFS

Blocks are the storage units for data in HDFS. The default block size is 128 megabytes (MB), and it can be configured. For example, to store a 550 MB file, HDFS splits it into five blocks: the first four hold 128 MB each (512 MB in total), and the fifth holds the remaining 38 MB. These blocks are then distributed across the DataNodes in the cluster.
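The block arithmetic in the example above can be checked with a few lines of Python. This sketch works in whole megabytes for readability (HDFS itself works in bytes, with the block size set by `dfs.blocksize`), and `split_into_blocks` is a hypothetical helper, not part of any Hadoop API.

```python
def split_into_blocks(file_size_mb, block_size_mb=128):
    """Return the sizes (in MB) of the blocks a file of `file_size_mb` is split into."""
    if file_size_mb < 0 or block_size_mb <= 0:
        raise ValueError("file size must be >= 0 and block size must be > 0")
    full_blocks, remainder = divmod(file_size_mb, block_size_mb)
    sizes = [block_size_mb] * full_blocks
    if remainder:
        sizes.append(remainder)  # the last block holds only the leftover data
    return sizes


print(split_into_blocks(550))       # [128, 128, 128, 128, 38]
print(split_into_blocks(550, 256))  # [256, 256, 38]
```

Note that the final block occupies only as much disk space as its data (38 MB here), not a full 128 MB.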

II. MapReduce Component

MapReduce is Hadoop’s data processing unit: a programming model whose jobs are typically written in Java. It processes data in a distributed, parallel fashion, which lets Hadoop work through large datasets quickly. This approach differs from traditional processing methods, in which all data was processed on a single machine. In MapReduce, each node processes its own portion of the data, and the partial results are then aggregated to produce the final output.

1. How do the MapReduce tasks (Map and Reduce) work?

The input data is passed to the Map() function, and its output is passed to the Reduce() function, which produces the final output. A MapReduce job is therefore divided into two tasks: the map task and the reduce task.

a. Map Task

In the map task, the input data (by default, one HDFS block per map task) is read as key-value pairs, also called tuples, which the Map() function then processes. The map task involves:

- RecordReader: It reads the input and converts each record into a key-value pair for the Map() function. The ‘key’ carries the record’s location information (with the default text input format, the byte offset of the line within the file), and the ‘value’ is the data of the record itself.

- Map() Function: This user-defined function processes the key-value pairs from the RecordReader and emits intermediate key-value pairs.

- Combiner: This optional step groups and aggregates the map output locally, on the mapper’s node, before it is sent to the reducers, which reduces the amount of data transferred over the network.
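The classic word-count example illustrates these three steps. The following plain-Python sketch imitates them on a single in-memory input split; it does not use Hadoop’s Java API, and `record_reader`, `map_fn`, and `combiner` are hypothetical names chosen for the example.

```python
from collections import Counter


def record_reader(text):
    """Yield (byte_offset, line) pairs, like the default line-oriented text input."""
    offset = 0
    for line in text.splitlines(keepends=True):
        yield offset, line.rstrip("\n")
        offset += len(line.encode("utf-8"))


def map_fn(offset, line):
    """User-defined map: emit (word, 1) for every word in the line; the offset is unused."""
    for word in line.lower().split():
        yield word, 1


def combiner(pairs):
    """Optional local aggregation: sum the counts for each word on the mapper's node."""
    totals = Counter()
    for word, count in pairs:
        totals[word] += count
    return sorted(totals.items())


split = "Hello Hadoop\nhello YARN\n"
map_output = [pair for offset, line in record_reader(split) for pair in map_fn(offset, line)]
print(map_output)            # [('hello', 1), ('hadoop', 1), ('hello', 1), ('yarn', 1)]
print(combiner(map_output))  # [('hadoop', 1), ('hello', 2), ('yarn', 1)]
```

The combiner shrinks four intermediate pairs to three before anything leaves the mapper; on real data with many repeated keys, the savings in network traffic are much larger.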

b. Reduce Task

The intermediate key-value pairs produced by the map tasks serve as input to the reduce task, which groups them by key through the following operations:

- Shuffle and Sort: The reduce task begins with this step. The map output is transferred (shuffled) to the reducers and sorted by key, so that all values belonging to the same key are grouped together.

- Reduce: The user-defined Reduce() function processes each key together with its grouped values and aggregates them (for example, by summing counts) into the final key-value pairs.

- OutputFormat: Once all operations are complete, the RecordWriter writes the final key-value pairs to the output file, one pair per line. With the default text output format, the key and value are separated by a tab.
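Continuing the word-count example, the reduce side can be sketched in plain Python as well. This is an illustration under simplifying assumptions (all mapper output fits in memory and there is a single reducer), and the function names are hypothetical rather than Hadoop APIs.

```python
from itertools import groupby
from operator import itemgetter


def shuffle_and_sort(mapper_outputs):
    """Collect the (key, value) pairs from every mapper and sort them by key."""
    merged = [pair for output in mapper_outputs for pair in output]
    return sorted(merged, key=itemgetter(0))


def reduce_fn(word, counts):
    """User-defined reduce: add up all counts emitted for one word."""
    return word, sum(counts)


def write_output(pairs, separator="\t"):
    """Imitate the default text output: one pair per line, key and value tab-separated."""
    return "".join(f"{key}{separator}{value}\n" for key, value in pairs)


def run_reduce(mapper_outputs):
    sorted_pairs = shuffle_and_sort(mapper_outputs)
    # groupby works here only because the pairs are already sorted by key.
    results = [
        reduce_fn(word, (count for _, count in group))
        for word, group in groupby(sorted_pairs, key=itemgetter(0))
    ]
    return write_output(results)


mapper_1 = [("hadoop", 1), ("hello", 2), ("yarn", 1)]  # already combined
mapper_2 = [("hdfs", 1), ("hello", 1)]
print(run_reduce([mapper_1, mapper_2]), end="")
```

Running it prints `hadoop 1`, `hdfs 1`, `hello 3`, and `yarn 1` on separate lines, with a tab between each key and value. Sorting before grouping is the essential design choice: it guarantees that all values for one key arrive together, which is what the shuffle-and-sort step provides to each reducer.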

III. YARN Component

YARN stands for “Yet Another Resource Negotiator”. It is Hadoop’s resource management and job scheduling unit. Many jobs, such as MapReduce jobs, run on the Hadoop cluster simultaneously, and each needs resources, primarily memory (RAM) and CPU, to complete its tasks. YARN allocates these cluster resources among the running applications and schedules their tasks.

YARN consists of the following components, which together process job requests and manage cluster resources:

1. Resource Manager

The ResourceManager is the master: it tracks the resources available across the cluster and allocates them to applications.

2. Node Manager

The NodeManager runs on each worker node and assists the ResourceManager. It launches and monitors containers on its node and reports the node’s resource usage to the ResourceManager.

3. Application Master

The ApplicationMaster runs for each application (for example, each MapReduce job). It requests containers from the ResourceManager and, once they are granted, works with the NodeManagers to launch and monitor the application’s tasks in those containers.

4. Container

A container is a bundle of physical resources, such as memory and CPU cores, on a single node. The actual data processing tasks run inside containers.
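The interplay between these components can be sketched as a resource-allocation loop: NodeManagers report free memory and CPU, the application’s master process asks for containers of a given size, and the ResourceManager grants each request on a node with enough room. The Python sketch below uses a simple first-fit rule and hypothetical names (`NodeManager`, `Container`, `allocate`); YARN’s actual schedulers, such as the Capacity Scheduler and the Fair Scheduler, apply queues, priorities, and sharing policies instead.

```python
from dataclasses import dataclass


@dataclass
class NodeManager:
    name: str
    free_memory_mb: int
    free_vcores: int


@dataclass
class Container:
    node: str
    memory_mb: int
    vcores: int


def allocate(nodes, requests):
    """Grant each (memory_mb, vcores) request on the first node that has room (first-fit).

    Returns the granted containers and the requests that must wait.
    """
    granted, pending = [], []
    for memory_mb, vcores in requests:
        for node in nodes:
            if node.free_memory_mb >= memory_mb and node.free_vcores >= vcores:
                # Reserve the resources on this node for the new container.
                node.free_memory_mb -= memory_mb
                node.free_vcores -= vcores
                granted.append(Container(node.name, memory_mb, vcores))
                break
        else:
            # No node can fit the request right now; it stays pending.
            pending.append((memory_mb, vcores))
    return granted, pending


nodes = [NodeManager("node1", 4096, 4), NodeManager("node2", 2048, 2)]
granted, pending = allocate(nodes, [(2048, 1), (2048, 1), (2048, 2), (1024, 1)])
print([c.node for c in granted])  # ['node1', 'node1', 'node2']
print(pending)                    # [(1024, 1)]
```

In this toy run, the last request waits because both nodes have run out of memory; it could be granted once a running container finishes and releases its resources.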

Hadoop Ecosystem

Beyond its core components, Hadoop has an ecosystem of tools that build on HDFS, MapReduce, and YARN and extend what they can do.

The main categories of tools in this ecosystem are:

1. Data Storage

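Besides HDFS, which provides data storage, this category includes the following storage and coordination services:

- HCatalog: It is a table and storage management layer that gives tools such as Pig, MapReduce, and Hive a shared tabular view of data stored in Hadoop.

- ZooKeeper: It is a centralized open-source service that maintains Hadoop cluster configuration information, provides naming, and handles distributed synchronization.

- Oozie: It is a workflow scheduler that coordinates Hadoop jobs, running complex multi-step processes in the required order so that they complete efficiently.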

2. General-Purpose Execution Engines

Along with MapReduce, the ecosystem includes other data processing frameworks, such as:

- Spark: It is a fast, in-memory cluster computing system capable of real-time data streaming, ETL (extract, transform, and load), and micro-batch processing.

- Tez: It is a high-performance framework for batch and interactive data processing. Instead of chaining several separate MapReduce jobs, it runs a job as a single directed acyclic graph (DAG) of tasks, which avoids much of the overhead between stages.
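The DAG idea behind Tez can be illustrated with Python’s standard `graphlib` module (Python 3.9+). The sketch below groups the vertices of a hypothetical query plan into stages, where every vertex in a stage depends only on earlier stages and could therefore run in parallel. It does not use the Tez API, and the vertex names are made up for the example.

```python
from graphlib import TopologicalSorter

# Hypothetical query plan: each vertex maps to the set of vertices it depends on.
plan = {
    "scan_orders": set(),
    "scan_customers": set(),
    "join": {"scan_orders", "scan_customers"},
    "aggregate": {"join"},
}


def execution_stages(graph):
    """Group DAG vertices into stages; vertices in the same stage can run in parallel."""
    sorter = TopologicalSorter(graph)
    sorter.prepare()
    stages = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # every dependency of these vertices is done
        stages.append(ready)
        sorter.done(*ready)
    return stages


print(execution_stages(plan))  # [['scan_customers', 'scan_orders'], ['join'], ['aggregate']]
```

Expressed as chained MapReduce jobs, the join and the aggregation would typically be separate jobs that hand off intermediate results through HDFS; a DAG engine can schedule the whole plan as one job.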

3. Database Management Tools

This category consists of Hadoop components that handle database management and querying:

- Hive: It is a data warehouse system developed by the Apache Software Foundation that supports SQL-like queries (HiveQL), ETL operations, and OLAP, and is designed to be fast, scalable, and extensible.

- Spark SQL: It is Spark’s distributed query engine for structured data processing. It provides uniform data access and works with Hive tables, Parquet files, and RDDs.

- Impala: It is a massively parallel, in-memory SQL query engine for data stored in Hadoop.

- Apache Drill: It is a low-latency distributed query engine.

- HBase: This is a non-relational distributed database for random access to distributed data.

4. Data Abstraction Engines

This category covers tools that provide higher-level abstractions for processing and moving data:

- Pig: It is a high-level platform whose scripting language, Pig Latin, is used to write complex data transformations, ETL processes, and analyses of massive datasets.

- Apache Sqoop: It is a data ingestion tool for transferring structured data between Hadoop and relational databases, supporting both import and export.

5. Real-Time Data Streaming Tools

Some real-time data streaming tools in Hadoop are:

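- Spark Streaming: It supports processing of live data streams.

- Kafka: It is an open-source distributed event streaming platform based on a publish-subscribe model: producers publish records to topics, and consumers subscribe to those topics to read them.

- Flume: It is a distributed service for collecting, aggregating, and moving large volumes of log data into Hadoop.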
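The publish-subscribe model behind Kafka can be sketched as an append-only log in which each consumer group tracks its own read position (offset). The `Topic` class below is a hypothetical, single-partition, in-memory toy written in Python; it is not the Kafka client API, which adds partitioning, replication, and durable storage.

```python
from collections import defaultdict


class Topic:
    """Toy append-only log with per-consumer-group offsets (not the Kafka API)."""

    def __init__(self, name):
        self.name = name
        self.log = []                    # records in the order they were published
        self.offsets = defaultdict(int)  # consumer group -> next offset to read

    def publish(self, record):
        """Append a record and return the offset it was stored at."""
        self.log.append(record)
        return len(self.log) - 1

    def poll(self, group, max_records=10):
        """Return the next batch for `group` and advance (commit) its offset."""
        start = self.offsets[group]
        batch = self.log[start:start + max_records]
        self.offsets[group] = start + len(batch)
        return batch


clicks = Topic("clicks")
for event in ["home", "search", "checkout"]:
    clicks.publish(event)

print(clicks.poll("analytics", max_records=2))  # ['home', 'search']
print(clicks.poll("analytics"))                 # ['checkout']
print(clicks.poll("archiver"))                  # ['home', 'search', 'checkout']
```

Because reading does not remove records, a second consumer group (`archiver`) receives the full stream independently of the first, which is the key difference from a simple work queue.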

6. Graph Processing Engines

This includes two graph processing frameworks:

- Giraph: It is an iterative graph processing system, often used to analyze large social graphs, that runs on Hadoop and uses its MapReduce implementation to process graphs.

- GraphX: It is Spark’s API for graphs and parallel graph computation.

7. Machine Learning Engines

Here are a few examples of machine learning engines used with Hadoop:

- H2O: It is a distributed machine learning platform for big data analytics, enabling linear scalability and fitting thousands of models to discover patterns.

- Oryx: It is a large-scale machine learning tier with Lambda architecture, utilizing Apache Hadoop for continuous model building.

- Spark MLlib: It is Spark’s scalable machine learning library.

- Mahout: It supports the implementation of distributed machine learning algorithms.

8. Cluster Management

Hadoop clusters are managed with tools such as:

- Ambari: This is a Hadoop cluster management software that allows for easy installation, configuration, and management of large-scale clusters.

Conclusion

Hadoop has transformed the way big data is managed. Across industries, it has become one of the most widely used frameworks for handling big data, and as a result there is a growing need for Hadoop experts. If you want to become a data scientist, data analyst, or software developer, learn as much as you can about Hadoop’s components.