Top 10 Features of Hadoop – The Complete Guide with Core Components

Data is information that gives insight for better decision-making. Digitalization has led to the use of big data everywhere, and it has made an impact across major industries including healthcare, banking, insurance, education, and online commerce. A single processing unit or a limited amount of storage is insufficient to manage data at this scale. Massive data calls for high-end processing and storage distributed across networks, with simple and speedy access for users. Hadoop is a solution for the smooth processing of big data.

This blog gives a brief description of what Hadoop is and its three core components, and then covers the essential features of Hadoop in detail.

Hadoop – An Overview

Hadoop is a software framework, written in Java, for working with big data. It enables the processing of large volumes of data and stores that data in a distributed manner across the nodes of a cluster. A cluster in Hadoop is a collection of nodes, which are commodity computers linked together to process data in parallel. Hadoop has three core components, HDFS, MapReduce, and YARN, which work together to deliver all of its functions.

Core Components of Hadoop

Hadoop’s components are the main constituents of the entire architecture. The distinctive features of Hadoop components aid in performing the essential tasks of big data processing. There are three components to Hadoop –

1. HDFS

This acronym expands to ‘Hadoop Distributed File System’. It acts as the storage layer of the Hadoop framework. HDFS splits files into blocks and stores them across the cluster; each block has a default size of 128 MB, which can be changed in the configuration. It consists of two types of nodes that work in a master-slave architecture, namely –

- NameNode (Master): It oversees the slave nodes and delegates responsibilities to them. It executes file system operations such as creating, deleting, and renaming files.

- DataNode (Slave): It houses the actual data and creates, replicates, and deletes data blocks as instructed by the NameNode.
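
To make the block idea concrete, here is a minimal sketch of how a file's size maps onto blocks, assuming the 128 MB default mentioned above. The function name `split_into_blocks` is made up for illustration and is not part of any Hadoop API.

```python
BLOCK_SIZE_MB = 128  # default HDFS block size, as stated in the text


def split_into_blocks(file_size_mb, block_size_mb=BLOCK_SIZE_MB):
    """Return the sizes (in MB) of the blocks a file of the given size would occupy."""
    if file_size_mb <= 0:
        return []
    full_blocks, remainder = divmod(file_size_mb, block_size_mb)
    sizes = [block_size_mb] * full_blocks
    if remainder:
        # The last block only holds the leftover data, not a full 128 MB.
        sizes.append(remainder)
    return sizes


print(split_into_blocks(300))  # [128, 128, 44]
```

A 300 MB file therefore becomes three blocks, and each of those blocks can be placed on a different DataNode.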

2. MapReduce

The MapReduce component is the data processing unit. It entails two tasks –

- Map Task – It takes the input data, processes it, and emits key-value pairs so that records with the same key can be grouped together.

- Reduce Task – It takes the values that the map task grouped under each key and combines them into a smaller, aggregated output.
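
The two tasks can be sketched in plain Python with the classic word-count example. This is only an in-memory illustration of the map, group, and reduce flow, not Hadoop's Java API; the function names (`map_task`, `shuffle`, `reduce_task`, `word_count`) are assumptions chosen for readability.

```python
from collections import defaultdict


def map_task(line):
    """Emit a (word, 1) pair for every word in one line of input."""
    return [(word.lower(), 1) for word in line.split()]


def shuffle(pairs):
    """Group values by key, mimicking the step between map and reduce."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_task(key, values):
    """Aggregate all values that share one key into a single result."""
    return key, sum(values)


def word_count(lines):
    pairs = [pair for line in lines for pair in map_task(line)]
    return dict(reduce_task(key, values) for key, values in shuffle(pairs).items())


print(word_count(["big data big cluster", "data node"]))
# {'big': 2, 'data': 2, 'cluster': 1, 'node': 1}
```

In a real cluster, many map tasks run in parallel on different nodes, and the grouping step moves data over the network before the reduce tasks run.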

3. YARN

It means “Yet Another Resource Negotiator”. As the name implies, it is the resource management and job scheduling unit. It allocates the cluster's resources, in the form of containers (bundles of memory and CPU) required for data processing, and coordinates jobs among the resource manager, application master, and node managers.
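
As a rough illustration of what handing out containers means, the sketch below places memory requests on nodes with a simple first-fit rule. It is a toy model under stated assumptions: the function `allocate_containers` and the node names are hypothetical, and real YARN schedulers are considerably more sophisticated than this.

```python
def allocate_containers(node_free_mb, requests_mb):
    """Place each container request on the first node with enough free memory.

    Returns the placements and the memory left on each node.
    """
    free = dict(node_free_mb)  # copy so the caller's dict is not modified
    placements = []
    for request in requests_mb:
        for node, available in free.items():
            if available >= request:
                free[node] = available - request
                placements.append((request, node))
                break
        else:
            # No node can host this container right now.
            placements.append((request, None))
    return placements, free


placements, remaining = allocate_containers(
    {"node1": 4096, "node2": 2048}, [2048, 2048, 2048, 1024]
)
print(placements)
print(remaining)
```

The last request stays unplaced because both nodes are full, which is the kind of situation a resource manager has to queue and retry.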

Hadoop’s ecosystem also includes data analytics tools, such as Apache Hive, that let users work with Hadoop data through SQL-style queries.

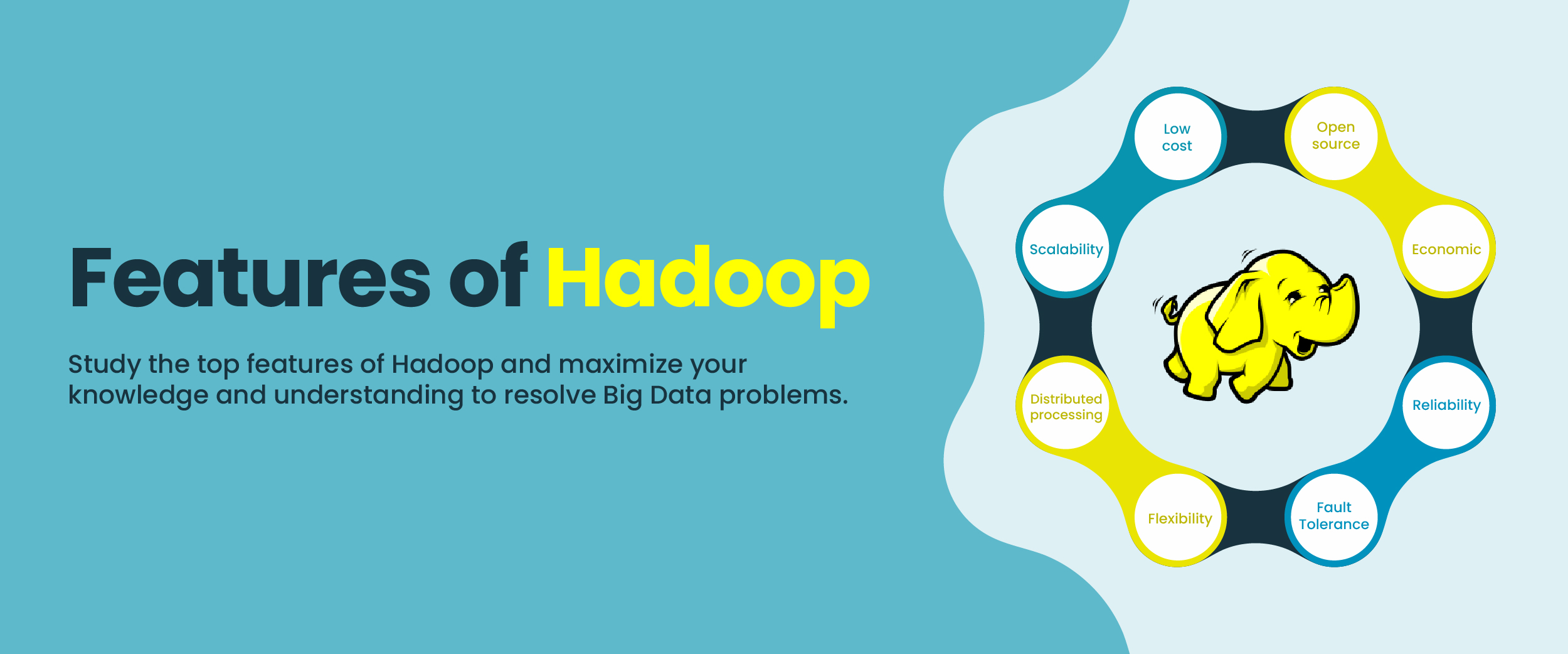

Key Features of Hadoop

Several Hadoop features simplify the handling of large amounts of data. Let us explore the fundamental features that make Hadoop a preferred choice for big data professionals across industries. They are as follows –

1. Open-Source Framework

Hadoop is a free and open-source framework. That means the source code is freely available online to everyone. This code can be customized to meet the needs of businesses.

2. Cost-Effectiveness

Hadoop is a cost-effective model because it makes use of open-source software and low-cost commodity hardware. A Hadoop cluster is made up of numerous nodes, each of which is a piece of commodity hardware (a server or physical workstation). Such machines are reasonably priced and offer a practical way to store and process large amounts of data.

3. High-Level Scalability

The Hadoop cluster is both horizontally and vertically scalable. Scalability refers to –

- Horizontal Scalability: This means adding more nodes to the cluster.

- Vertical Scalability: This means increasing the hardware capacity (such as data storage capacity) of the existing nodes.

This high-level and flexible scalability of Hadoop offers powerful processing capabilities.
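
The effect of both kinds of scaling on storage can be estimated with simple arithmetic. The sketch below assumes three copies of every block and ignores space reserved for other uses, so treat the numbers as rough estimates; `usable_capacity_tb` is a made-up helper, not a Hadoop function.

```python
def usable_capacity_tb(nodes, disk_per_node_tb, replication=3):
    """Approximate usable storage: raw capacity divided by the number of copies kept."""
    return nodes * disk_per_node_tb / replication


print(usable_capacity_tb(10, 12))  # 40.0  (starting cluster)
print(usable_capacity_tb(20, 12))  # 80.0  (horizontal: twice as many nodes)
print(usable_capacity_tb(10, 24))  # 80.0  (vertical: twice the disk per node)
```

Both routes double the storage here, but adding nodes also adds processing capacity, since each new node brings its own CPU and memory.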

4. Fault Tolerance

Hadoop uses a replication method to store copies of each data block on different machines (nodes). This replication mechanism makes Hadoop a fault-tolerant framework because if any of the machines fail or crash, a replica of the same data can be accessed on other machines. The more recent Hadoop 3 version enhances this feature with ‘Erasure Coding’, an alternative to replication that provides fault tolerance while using less space: its storage overhead is no more than 50 percent, compared with 200 percent when three copies of every block are kept.
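
The storage overhead figures can be checked with a little arithmetic. The sketch below assumes three-way replication and a Reed-Solomon layout of 6 data units plus 3 parity units as the erasure coding example; the function names are illustrative only.

```python
def replication_overhead(replication_factor):
    """Extra storage, as a percentage of the original data, when keeping full copies."""
    return (replication_factor - 1) * 100.0


def erasure_coding_overhead(data_units, parity_units):
    """Extra storage, as a percentage, when parity units protect the data units."""
    return parity_units * 100.0 / data_units


print(replication_overhead(3))         # 200.0
print(erasure_coding_overhead(6, 3))   # 50.0
print(erasure_coding_overhead(10, 4))  # 40.0
```

With 6 data units and 3 parity units, any three units can be lost and the data can still be rebuilt, at a quarter of the extra space that three full copies would need.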

5. High-Availability of Data

High availability of data and fault tolerance are complementary features. Fault tolerance keeps data available at any time, even if a DataNode or the NameNode fails or crashes, because the same data can still be reached through another node. Let us see how –

Case 1: Availability of Data in Case of a DataNode Failure

Even if a DataNode fails, the cluster is still accessible to users in the following ways –

- HDFS (Hadoop Distributed File System) stores copies of files on different nodes, and by default each DataNode sends a heartbeat signal to the NameNode every three seconds.

- The NameNode considers a DataNode dead if it has not received a heartbeat from it within a specified time (about 10 minutes by default).

- Re-replication then begins: the NameNode instructs DataNodes that already hold a copy of the data to duplicate it onto additional DataNodes.

- When a user requests data, the NameNode sends the IP address of the nearest DataNode that holds it.

- If that DataNode cannot be accessed, the NameNode directs the user to another DataNode that has the same data.
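
The heartbeat check can be sketched as follows. With default settings, the dead-node timeout is derived as twice the recheck interval (`dfs.namenode.heartbeat.recheck-interval`, 5 minutes) plus ten heartbeat intervals (`dfs.heartbeat.interval`, 3 seconds), which comes to 630 seconds, or roughly the 10 minutes quoted above. The helper `find_dead_nodes` and the node names are hypothetical and only model the idea.

```python
HEARTBEAT_INTERVAL_S = 3   # default for dfs.heartbeat.interval
RECHECK_INTERVAL_S = 300   # default for dfs.namenode.heartbeat.recheck-interval (5 minutes)


def dead_node_timeout_s(heartbeat=HEARTBEAT_INTERVAL_S, recheck=RECHECK_INTERVAL_S):
    """Seconds of silence after which a DataNode is treated as dead."""
    return 2 * recheck + 10 * heartbeat


def find_dead_nodes(last_heartbeat, now, timeout):
    """Return the nodes whose most recent heartbeat is older than the timeout."""
    return sorted(node for node, seen in last_heartbeat.items() if now - seen > timeout)


timeout = dead_node_timeout_s()
print(timeout)  # 630
print(find_dead_nodes({"dn1": 995, "dn2": 300, "dn3": 998}, now=1000, timeout=timeout))
# ['dn2']
```

Once a node lands in that list, the blocks it held are candidates for re-replication onto healthy DataNodes.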

Case 2: Availability of Data in Case of NameNode Failure

To use the file system in the cluster, access to the NameNode is required. In a high-availability setup, there are two NameNodes, one active and one passive. The active NameNode is the running node that serves client requests, whereas the passive NameNode is the standby node in the cluster. If the active NameNode fails, the passive NameNode takes over and provides uninterrupted client service.
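
The takeover can be pictured with a very small model. This sketch only tracks which node is currently serving clients; the class `NameNodePair` and the node names are invented for illustration, and the machinery a real cluster needs to keep the standby in sync and to trigger the switch is not modelled.

```python
class NameNodePair:
    """Toy model of an active NameNode with one standby."""

    def __init__(self, active, standby):
        self.active = active
        self.standby = standby

    def handle_failure(self, failed):
        """Promote the standby if the active node failed; return who serves clients now."""
        if failed == self.active:
            self.active, self.standby = self.standby, None
        elif failed == self.standby:
            self.standby = None
        return self.active


pair = NameNodePair(active="nn1", standby="nn2")
print(pair.handle_failure("nn1"))  # nn2
print(pair.standby)                # None
```

After the failover, the cluster keeps serving clients through `nn2`, but it has no standby left until another one is brought up.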

6. Data-Reliability

Data reliability in Hadoop comes from –

- The replication mechanism in HDFS (Hadoop Distributed File System), which creates duplicates of each block so that data is stored reliably on the nodes.

- The Block Scanner, Volume Scanner, Disk Checker, and Directory Scanner, which are built-in mechanisms provided by the framework itself to ensure data reliability.
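
Checks of this kind typically work by comparing stored data against checksums recorded when the data was written. The sketch below shows the idea with Python's `zlib.crc32` over 512-byte chunks. It is a stand-in under stated assumptions: the chunk size and function names are chosen for illustration, and this is not HDFS's actual checksum code.

```python
import zlib

CHUNK_SIZE = 512  # bytes covered by each checksum in this sketch


def chunk_checksums(data, chunk_size=CHUNK_SIZE):
    """Compute one CRC32 checksum per fixed-size chunk of the data."""
    return [zlib.crc32(data[i:i + chunk_size]) for i in range(0, len(data), chunk_size)]


def find_corrupt_chunks(data, expected, chunk_size=CHUNK_SIZE):
    """Return the indexes of chunks whose checksum no longer matches the stored one."""
    actual = chunk_checksums(data, chunk_size)
    return [i for i, (a, e) in enumerate(zip(actual, expected)) if a != e]


original = bytes(range(256)) * 6          # 1536 bytes, i.e. three chunks
stored = chunk_checksums(original)        # recorded at write time

damaged = bytearray(original)
damaged[600] ^= 0xFF                      # flip one byte inside the second chunk

print(find_corrupt_chunks(original, stored))        # []
print(find_corrupt_chunks(bytes(damaged), stored))  # [1]
```

When a mismatch like this is found on one node, a healthy replica on another node can be used to restore the damaged copy.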

7. Faster Data Processing

Traditional data processing was slow because enough resources were not available to process the data smoothly and quickly. Hadoop overcomes this challenge: because data is stored in a distributed manner across the nodes of the cluster, huge amounts of data can be processed in parallel, which makes processing much faster.

8. Data Locality

This is one of the most distinctive features of Hadoop. The MapReduce component has a feature called data locality: the computation logic is moved close to the node where the actual data is located, rather than moving the data to the computation. As a result, network congestion is reduced and overall system performance improves.
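
A minimal sketch of a locality-aware choice is shown below: given the nodes that store a block and the nodes that are currently free, prefer a free node that already has the data. The function `pick_node` and its labels are hypothetical, and intermediate options such as preferring a node in the same rack are left out for brevity.

```python
def pick_node(block_locations, free_nodes):
    """Choose where to run a task for one block, preferring a node that stores it."""
    for node in block_locations:
        if node in free_nodes:
            return node, "node-local"   # no data needs to cross the network
    if free_nodes:
        return sorted(free_nodes)[0], "remote"  # data must be fetched from another node
    return None, "wait"                 # nothing is free yet


print(pick_node(["dn2", "dn5", "dn7"], {"dn1", "dn5"}))  # ('dn5', 'node-local')
print(pick_node(["dn2", "dn5", "dn7"], {"dn1", "dn3"}))  # ('dn1', 'remote')
print(pick_node(["dn2"], set()))                         # (None, 'wait')
```

Because each block usually has several replicas, there is a good chance that at least one node holding the data is free, which is why replication and data locality work well together.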

9. Possibility of Processing All Types of Data

The Hadoop framework can process all types of data, whether structured, semi-structured, or unstructured, including databases, images, videos, audio, and graphics. This lets users work with any kind of data for analysis and interpretation, irrespective of its format or size. In the Hadoop ecosystem, various tools such as Apache Hive, Pig, Sqoop, ZooKeeper, and Spark's GraphX help query, move, coordinate, and analyze this data.

10. Easy Operability

Hadoop is an open-source software framework written in Java. To work with it, users need some familiarity with languages such as Java and SQL. The framework itself handles the complete process of distributing data storage and processing across the cluster, so users do not have to manage it themselves. Therefore, it is easy to operate.

Conclusion

In the age of Big Data, Hadoop applications have become common in companies that handle data at scale. The features of Hadoop covered in this blog show how valuable it is when dealing with large amounts of data. Learn to use Hadoop to improve your software development abilities.